Chapter 3 Corpus Preparation and Selection Strategy

The first part of this chapter describes a preparation workflow which is generally applicable to all corpora recently prepared in the context of the PolMine project. The selection strategy described in the second part applies to the most recent version of the MigParl corpus. For in-depth information about the preparation and selection process of older versions of the corpus, see the individual chapters for the versions’ data reports and technical documentation.

3.1 Corpus Preparation Workflow

The goal of the preparation workflow described in the following the transformation of - speaking from a computational perspective - sparsely structured textual data into a sustainable resource for research. Techically, we collect plenary prococols (predominantly pdf documents) and turn them into TEI-compatible XML files. These TEI-XML files are then imported into the Corpus Workbench (Evert and Hardie 2011) which makes handling large text corpora for a variety of scientific applications managable.

The preparation of the TEI version of MigParl implements the following workflow:

Preprocessing: Prepare consolidated UTF-8 plain text documents (ensuring uniformity of encodings, conversion of pdf to txt if necessary);

XMLification: Turn the plain text documents into TEI format: Extraction of metadata, annotation of speakers etc.;

Consolidation: Consolidating speaker names and enriching documents.

For the preparation of the plain text documents the trickypdf package was used, which was developed in the context of the PolMine project. Because plenary protocols are provided in a two-column layout almost exclusively, the development of this package was a prerequisite for the preparation of clean input data for the following processing steps. As an exception, Hesse did provide Microsoft Word documents (.doc) for the time until 2002. These documents where converted into plain text as well.

The conversion of plain text files in structurally annotated XML documents is provided by the framework for parsing plenary protocols or frappp, which has been developed in the PolMine project. frappp is used to facilitate a workflow in which regular expressions are formulated for patterns which can be annotated with XML elements. This is the case for metadata, speakers, interjections and agenda items in particular. False positives and false negatives are handled by both a list of known mismatches and preprocessing steps which cleans up some faulty input data. In consequence, the process is fundamentally automated, however, manual quality control is strictly necessary to a considerable extent.

Particular care has to be taken to ensure that identified speakers are consistently named. This is done with reference to external data sources of names which are complemented with a list of known aliases. The primary external data source used for the MigParl data is Wikipedia (Blätte and Blessing 2018: 813). One remaining challenge is the time-dependency of these external checks. Sometimes the information in the protocol and the information in the external data might differ. A member of parliament might change parties within a legislative period. In this case, our approach will label this member’s party affiliation with the one found in the external data source. The same is true with names. If the protocol says “Ulla Schmidt” and the external data source “Ursula Schmidt”, then the external information is used. This external data is stored in a Git repository.

Ultimately, we arrive at a machine-readable version of the protocols which can be linguistically annotated. For this, the XML/TEI version of the initial plenary protocols is taken through a pipeline of standard Natural Language Processing (NLP) tasks. Stanford CoreNLP is used for tokenization, part-of-speech (POS) and named-entity (NE) annotation (Manning et al. 2014). To add lemmata to the corpus, TreeTagger is used (Schmid 1995).

3.2 Selection Strategy

MigParl is a thematic subset of plenary protocols relevant for migration and integration research. As such, a selection strategy was necessary to determine this relevance from a base population of all protocols of the German regional state. We follow a two-pronged selection approach.

3.2.1 Strategy Overview

3.2.1.1 Topic Model-Based Selection

- topic modelling: a topic model for each of the regional state parliament corpora was calculated and the 100 most relevant terms per topic retrieved. The number of topics to be calculated, which acts as a hyperparameter of the algorithm, was determined with a preceding optimization process

- topic identification: A number of core terms conveying the concept of migration and integration were theoretically derived by literature review. For this purpose, the dictionary of Blätte and Wüst (2017) was used and extended. This word list and the 100 most relevant terms per topic were matched against each other. A topic with more than five hits from the dictionary was deemed relevant for migration and integration

- document identification: for each speech, the probability to belong to one of the identified topics was calculated. If the sum probability exceeded a threshold, the speech was considered relevant

- the relevant speeches were included in the

MigParlcorpus

3.2.1.2 Dictionary-Based Selection

- the MigPress dictionary (Blätte, Schmitz-Vardar, and Leonhardt 2020) was used to identify speeches in which at least five instances of the dictionary terms occur.

- this differs from the threshold of one occurrence used in the creation of the

MigPresscorpus. This is due to difference in language use in parliamentary settings and the greater average length of speeches compared to newspaper articles. - the relevant speeches were included in the

MigParlcorpus as well

The following section describes this sampling strategy in detail.

3.2.2 Topic Modelling

Topic Modelling is an unsupervised machine learning approach which is often used for cases like this: We want to classify documents of a collection, separating them into identifiable classes using the textual features of the documents and their manifestations in the collection. This data-driven approach is particularly useful in cases in which we are not sure which topics to expect in a corpus (Silge and Robinson 2019, chap. 6). The technical implementation of the topic modelling procedure is explained elsewhere. Importantly, we use the Latent Dirichlet allocation (LDA) as the approach to fit the topic models. With LDA we can estimate the topic probability for each document (Silge and Robinson 2019, chap. 6). Before fitting the model, we removed short documents (> 100 words) as well as noise (i.e. stop words, short words (shorter than three characters) and numbers). After stop word removal, we remove remaining rare words which occur less than 11 times and finally documents which are empty after the preprocessing. We fit the document with a k of 250 which proved to be reasonable for parliamentary data. Alpha is 0.2 in these cases.

3.2.2.1 Topic Selection

In the beta version of the MigParl corpus (build date 2018-11-27) we calculated a topic model for each regional parliament corpus and identified the relevant topics qualitatively by checking the 50 most relevant terms per topic by hand.

In this update, we still perform a topic model for each regional parliament. However, instead of evaluating 4000 topics manually, we used a predefined list of key terms to determine their relevance for the selection. The initial list was created by Blätte and Wüst (2017) comprising about 800 terms which were collected in a semi-supervised fashion, starting from a number of seed words and expanding on them by exploring composita which actually occur in the data.

3.2.2.2 A Dictionary to select topics

Using a list, we are confronted with a trade-off between precision (using a sparse amount of keywords to grasp a well-defined group of speeches) and a broad selection, consisting of more documents than we would want in a narrow definition. Diverging from the initial list by Blätte and Wüst (2017), we decided to use a broader definition, accepting false positives as we wanted to retain the possibility to filter the classification results afterwards. After labelling two topic models manually to establish a standard understanding of the relevance of a topic, we extended the list in an iterative process until the dictionary approach matched the human classification without introducing too many non-topical positives. To this end, we included a number of terms which are not necessarily referring exclusively to migration and integration and which were in part omitted from the initial list because of their ambiguity: “Leitkultur”, “Identität” and “Integration” describing cultural aspects, “Terror-,”Anschlag“,”Anschläge“,”Gefährder" and “Sicherheit-” as indicators of security discourse and “Ausland”, “ausländ-” and “Europa” to cover references to international contexts. Again, these terms can be characterised by a high degree of ambiguity and should not suggest a certain understanding of integration and migration. On the contrary, the inclusion these terms enables us to uncover the connection between migration and integration discourse on the one hand and adjacent topoi. This is something that would not be possible with a narrower dictionary.

Some words of the list were shortened by a hyphen, such as “Schmuggler-” (facilitators of (illegal) migration) which for example might also include “Schmugglerbande”. This possibility was implemented as well.

The resulting list is our dictionary:

3.2.2.3 Semi-Automatic Labelling

We test the approach with the initial list proposed by Blätte and Wüst (2017) extended by the words described above. As the central evaluation step, we want to compare the 100 most relevant words of each topic we modelled with this list. We will label the topic as relevant if at least five words of the list also occur in the 100 most relevant words of the topic.

Performed with the initial keyword list, we found 93 relevant topics. After checking for a last time if these topics seem reasonable we are content that this method improves reproducibility and transparency.

3.2.2.4 Annotating topic probabilities

The following chunks of code will separate an individual corpus into speeches and add the sum of the probability of those topics we identified as relevant as an structural attribute to each speech. We then can create a subcorpus of those speeches with a sum probability greater than a certain threshold.

3.2.2.4.1 Procedure

First, the s_attribute speech must be encoded into the existing corpus. In a second step, for each speech, the sum of the probabilities of those topics which are relevant for migration and integration research are encoded.

The following initial corpora are used.

Afterwards, we sum up the probability we calculated with the topic model, determining that a speech is indeed about migration and integration in some sense. To determine the relevant topics we used a dictionary approach described earlier.

3.2.2.4.2 Example from the Saarland

After resetting the registry, each speech in every corpus does have a migration and integration probability attached to it.

We will use this annotation in the following step to create subsets of relevant speeches from each corpus.

3.2.2.4.3 Creating thematic subcorpora

Now we want to use this probability to create a subset of each regional corpus which fit the criteria of our first, topic modelling driven approach. The question is which estimated proportion of words of a speech does have to belong to a topic relevant to migration and integration in order to be part of the MigParl corpus (Silge and Robinson 2019).

3.2.2.4.4 Which probability?

In the logic of LDA topic models, each document (here: speech) does belong to each topic in varying order. The following table shows the ten most important topics of a single speech. The column “No.” describes the topic number (entire range: 1 to 250). The column “Probability” indicates the probability of the speech to belong to the topic (range: 0 to 1). The column “Description” shows the five most probable terms for each topic.

In consequence, even speeches which have very little relevance to migration and integration related issues could have a probability greater that zero to be part of the topic. One essential question is how we create an optimal trade-off between false positives (speeches which do have a numeric probability greater than the threshold we set but are not relevant) and false negatives (the other way around). In terms of machine learning, this can also be described as the trade-off between precision (to which extent does every document in a thematic subcorpus actually belong there) and recall (how many documents of the base population which do belong to the subcorpus are actually part of it). When choosing the topics we are using an approach which values reproducibility and inclusiveness over accuracy. Hence, the dictionary approach uses a deliberatively broad word list with a great deal of ambiguity. A lot of words will be used in other contexts as well but we are more concerned to miss out on a topic than to include too many topics (in other words: when in doubt, we prefer recall over precision). Choosing a proper threshold is a way to balance both demands.

3.2.2.4.5 Trials

Since it is hard to tell a priori which would be a fitting threshold, we test it with the smallest parliament we have: The Saarland corpus.

3.2.2.4.5.1 Mean, max and min probabilities

Over all the mean probability of a speech to belong to migration and integration relevant topics is 0.008914 with a maximum value of 0.5154976 and a minimum value of 0. Given the very small mean value, it might be unreasonable to assume a very high threshold which would capture only a very small subset of speeches.

3.2.2.4.5.2 Proportions

In the following, we used several threshold values and calculated the proportion of the resulting subset to see if that is the case.

## A threshold of 0.02 returns 9.65 per cent of the corpus

## A threshold of 0.05 returns 5.11 per cent of the corpus

## A threshold of 0.1 returns 3.67 per cent of the corpus

## A threshold of 0.2 returns 1.93 per cent of the corpus

## A threshold of 0.3 returns 0.55 per cent of the corpus

## A threshold of 0.4 returns 0.05 per cent of the corpusThe proportion of the subset size and the corpus size varies from 9.65% with a threshold of 0.02 to 0.05% with a threshold of 0.4. Intuitively certainly more than one percent of the speeches held in a German parliament would be related to some form of migration and integration relevant topic. Hence, we qualitatively check whether the smaller thresholds (0.02, 0.05, 0.1 and 0.2) yield reasonable results in terms of relevant speeches returned.

3.2.2.4.5.3 Checking qualitatively

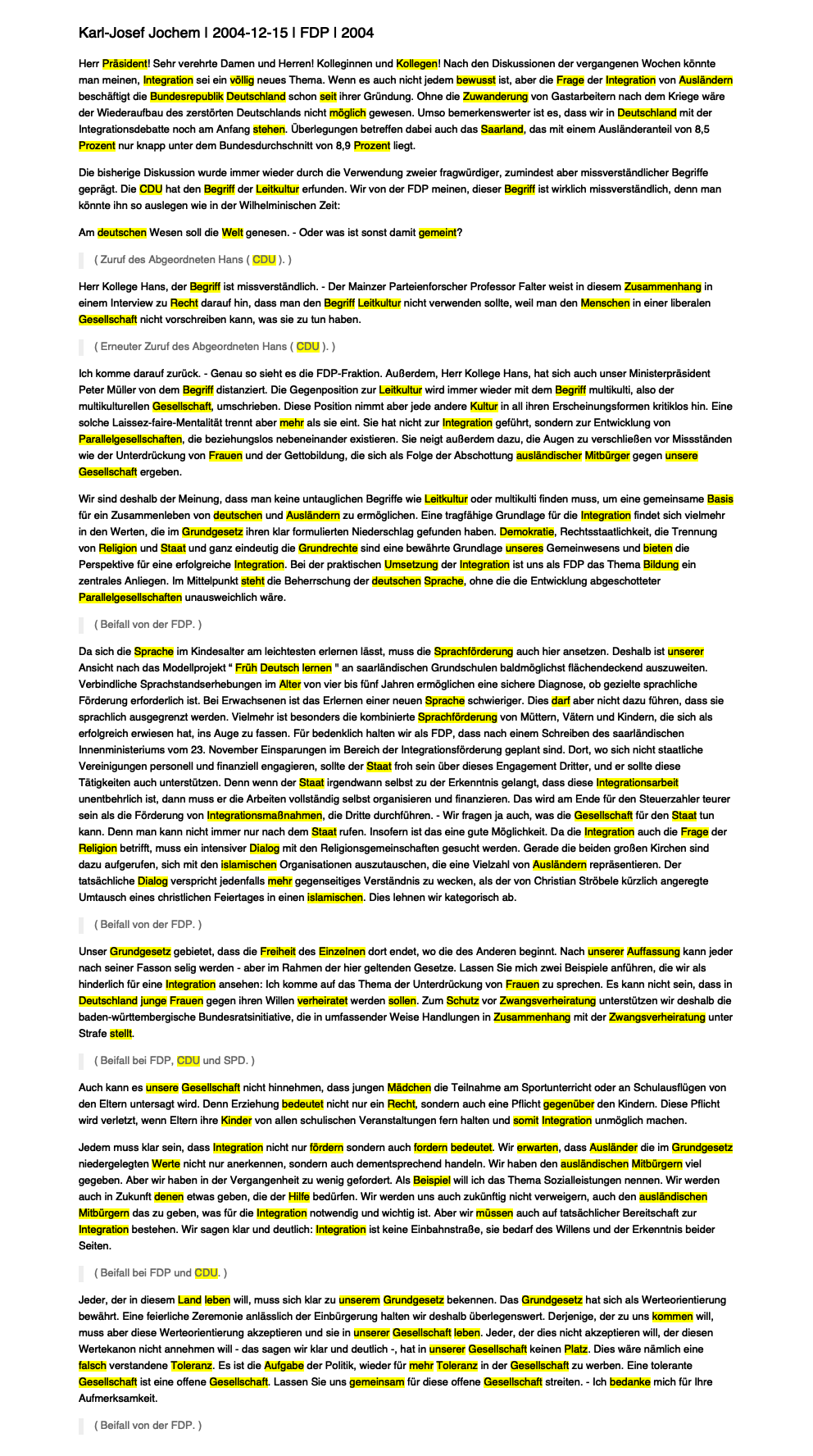

We can read a couple of speeches which were returned by different thresholds. To make the judgement easier, we highlight the words which were matched against the dictionary to determine relevance.

Note: To make this speech visible in both the html and the pdf version of this documentation, we embed an image instead.

Select Speech

3.2.2.5 Conclusion - Speeches with a Probability Threshold of 0.05

The smallest threshold of 0.02 which is twice the mean of the probabilities, is certainly not restrictive enough. Checking manually, we do have a lot of false positives. As for the highest threshold of 0.2 (which is the case for the speech shown above), we have only relevant speeches but at the same time we cover only a small portion of the initial corpus. Hence, we do expect a lot of false negatives as well. A threshold of 0.05 seems to be the best trade-off from this point of view. There is no guarantee that this is the case for all parliaments we subset but it is our best guess going forward.

We subset each regional corpus by the migration and integration probability. In addition, to account for the varying coverage of the regional state corpora, we limit the time range to 2000 to 2018. This safeguards comparability within the corpus as almost all of the regional states are part of the entire time span (apart from Saarland which only starts in 2005 and Rhineland-Palatinate which starts in 2001). In addition, this aligns MigParl with other resources of the MigTex project. To later combine the speeches found with this first topic modelling driven approach, we extract the speech names only at this point. The actual decoding will be performed later.

3.2.3 Dictionary Approach

3.2.3.1 Why a second approach?

In the documentation of the MigPress corpus of German newspaper articles concerned with migration and integration, a detailed report is given of how the topical relevance of an article was determined. Due to limited access to the data beforehand, a dictionary based approach was used. The same sampling strategy will be deployed on the parliamentary data in addition to the topic modelling based approach previously described. As both approaches do have their merits, we incorporate both sampling strategies in the MigParl corpus.

The dictionary-based approach first employed in the creation of the MigPress corpus and now transferred to MigParl might be considered theoretically more robust as the dictionary creation process starts from theoretical assumptions of what constitutes relevance for migration and integration research and is then expanded upon in a data-driven fashion. However, the process was also informed by a couple of rather specific limits of data access and the volume of the resulting corpus. Hence, a rather narrow definition of migration and integration relevance was chosen in the first place and then the task was to match all the textual manifestations of this definition as closely as possible. In consequence, the dictionary approach might be considered more transparent and its limited width might help to explain what the corpus comprises (and what it does not), while the aforementioned limits make the possibly desirable inclusion of articles addressing related issues not matched by the dictionary itself less feasible.

As we work under the assumption to have access to every available speech held in a German regional state parliament in the period of interest (see data report for further details), another form of data retrieval was chosen, as explained earlier. We deviate from the dictionary chosen in the creation of the MigPress corpus when selecting relevant topics because choosing a thematically broader, more issue focussed dictionary allows us to explicitly include topics which potentially are connected to core topics of migration and integration but are ambiguous and hence not covered by the concise dictionary of MigPress which is explicitly driven by terms connected to target populations. Technically, as we deploy the dictionary used for the topic selection on the 100 most relevant words of each topic, the dictionary used here is more suited for matching topical issue related terms as terms used for the description of target populations potentially cluster together in one topic per regional state. Ultimately, in contrast to the number of articles available for MigPress we do not face a hard limit for the number of speeches we can include. That being said, a result of this broader approach is that we might include more non-relevant speeches, i.e. noise into the data which is something researchers working with the data should be aware of. In addition, there are several decisions which influence the sampling process apart from the selection of relevant topics, such as the hyperparameters of the topic modelling process or which threshold to choose. These were explicated earlier.

In consequence, we consider it best to combine both approaches in the creation of MigParl and leave it to the researcher to decide which approach should be chosen, considering the reasoning explained above.

3.2.3.2 The MigPress dictionary

We use the dictionary developed and thoroughly explained in the MigPress documentation. In a nutshell, this dictionary is derived from the central assumption that the relevance of migration and integration always is connected with addressing a target population. Starting from some core terms, the key-term list is then expanded upon in a data-driven fashion.

_.*Asyl_, _.*Flüchtling_, _.*Flüchtlinge_, _.*Flüchtlingen_, _.*Flüchtlingslager_, _.*Flüchtlingspolitik_, _.*Migrant_, _.*Migranten_, _.*Migrantin_, _.*Migrantinnen_, _Abschiebe.*_, _Abschiebung.*_, Altfallregelung, Anerkennungsgesetz, Anerkennungsquote, Anerkennungsverfahren, _Ankerzentr.*_, _Anti-Asyl.*_, _Anti-Flüchtling.*_, _anti-semit.*_, _antiflüchtling.*_, _Antisemit.*_, Anwerbestopp, Arbeitsmigration, Assimilation, Assimilierung, _Asyl.*_, Aufenthaltsgenehmigung, _Aufenthaltsgesetz.*_, _Aufenthaltsrecht.*_, Aufenthaltsstatus, Aufenthaltstitel, Auffanglager, Aufnahmebereitschaft, _Aufnahmeeinrichtung.*_, Aufnahmefähigkeit, Aufnahmegesellschaft, _Aufnahmelager.*_, Aufnahmeland, _Aufnahmezentr.*_, _Ausbürger.*_, _Ausgewander.*_, _Ausländer.*_, _Auslandstürk.*_, _Ausreise.*_, _Außengrenze.*_, _Aussiedler.*_, _Auswand.*_, Balkan-Route, Balkanroute, BAMF, Binnenwanderung, _Biodeutsch.*_, Bleibeperspektive, _Bleiberecht.*_, Bundesvertriebenengesetz, Burka, _Burka-.*_, _Burkini.*_, Daueraufenthaltsrecht, _Deportation.*_, Desintegration, _Deutsch-Türk.*_, _deutsch-türkisch.*_, Deutschkenntnisse, Deutschkurs, _Deutschstämmig.*_, _Deutschtürk.*_, _Doppelpass.*_, _Doppelstaat.*_, _Drittstaat.*_, _Dublin-.*_, _Duldung.*_, _Ehegattennachzug.*_, _Ehrenmord.*_, _Einbürgerung.*_, _Eingebürger.*_, _eingereist.*_, _Eingewander.*_, Einreise, _Einwander.*_, _Emigrant.*_, _Emigration.*_, _Erstaufnahmeeinrichtung.*_, _EU-Asyl.*_, _EU-Diskriminierung.*_, _EU-Flüchtling.*_, EU-Türkei-Abkommen, _EU-Zuwander.*_, EURODAC, _Fachkräfteeinwanderung.*_, _Familiennachz.*_, Familienzusammenführung, _Flüchtende.*_, _Flüchtling.*_, Freizügigkeit, _Fremdenfeindlich.*_, FRONTEX, _Gastarbeiter.*_, _Geduldete.*_, _Geflohene.*_, _Geflüchtete.*_, _Grenzkontrolle.*_, Grenzpolitik, _Grenzschließung.*_, Grenzschutz, _Grenzschützer.*_, Grenzübertritt, _Grenzwächter.*_, _Heimatvertriebene.*_, Herkunftsland, Herkunftsländer, Herkunftsländern, Herkunftsstaaten, _Identitätsfeststellung.*_, _Imam.*_, _Immigrant.*_, _Immigration.*_, _Integrationsbeauftragte.*_, _Integrationsbemühungen.*_, _Integrationsdebatte.*_, Integrationsfähigkeit, _Integrationsgesetz.*_, _Integrationsgipfel.*_, _Integrationskonzept.*_, _Integrationskurs.*_, Integrationsland, _Integrationsmaßnahme.*_, _Integrationsminister.*_, Integrationsplan, Integrationspolitik, _Integrationsproblem.*_, _Islam.*_, _Kirchenasyl.*_, _Kopftuch.*_, _Kopftücher.*_, _Koran.*_, Lampedusa, Leitkultur, Massenmigration, Mehrheitsgesellschaft, Mehrstaatigkeit, Mehrstaatlichkeit, Menschenhändler, _Menschenschmugg.*_, _Migrant.*_, _Migration.*_, Migrationsgesellschaft, _Minarett.*_, Mitbürger, _Moschee.*_, _Moslem.*_, Multi-Kulti, _Multikult.*_, Multikulti, _Muslim.*_, _Nicht-Deutsch.*_, _Nichtdeutsch.*_, Nikab, Niqab, Optionspflicht, _Parallelgesellschaft.*_, _Passkontrollen.*_, Personenfreizügigkeit, Personenkontrollen, Punktesystem, _Rassis.*_, _Religionsunterricht.*_, Residenzpflicht, Roma, _Roma-.*_, Romani, _Rückführung.*_, Rumänen, _Russlanddeutsch.*_, _Sammelunterk.*_, Schengen, _Schlepper.*_, _Schleuser.*_, Schleusung, _Schutzbedürftig.*_, _Schutzsuchend.*_, Sea-Watch, Seenotrettung, Sinti, Sinto, _Spätaussiedler.*_, Sprachförderung, _Sprachkurs.*_, _Sprachtest.*_, _Sprachunterricht.*_, _Staatenlos.*_, _Staatsangehörig.*_, _Staatsbürgerschaft.*_, _subsidiäre.*_, Syrer, _Transitstaat.*_, Transitzonen, _Türkeistämmig.*_, _Türkischstämmig.*_, Umsiedlungsprogramm, _UN-Flüchtling.*_, unbegleitete, UNHCR, _Vertriebene.*_, _Visum.*_, _Völkerwanderung.*_, Vorrangprüfung, _Wanderungsbewegung.*_, _Weltflüchtling.*_, _Wiedereinreise.*_, _Willkommenskultur.*_, Wohnbevölkerung, Zigeuner, Zigeunerin, _Zugewander.*_, _Zugezogene.*_, _Zuwander.*_, _Zuzug.*_ and Zwangsheirat

3.2.3.3 Subsetting by term occurrence

In MigPress we use a rather soft criteria for article selection: We select an article if at least one of the terms of the dictionary occurs at least once in a given article. In previous tests with plenary data, this low threshold seemed too low as it apparently introduced a lot of false positives. This is not surprising, as the MigPress dictionary contains terms which are potentially more frequent and ambiguous in parliamentary speech (for example “Mitbürger” to address (parts of) the audience) and given that the average speech in the corpus is about twice as long as the average newspaper article (in terms of number of tokens). In consequence, it seemed acceptable to increase this threshold to five.

3.2.3.4 Decode selected speeches

In the previous steps, we determined which topics of our topic model are relevant, we calculated the probability that single speeches belong to these topics, we determined a fitting threshold probability to be considered in the subcorpus and finally identified relevant speeches accordingly. Additionally, we deployed a dictionary-based approach first used in MigPress to identify an additional set of speeches.

Now, these identified speeches have to be extracted from the regional state corpora. Then, they have to be merged and encoded into the MigParl corpus. The steps necessary here largely follow the vignette of the cwbtools package.

The output will be the MigParl corpus itself as a tarball which can be installed by cwbtools and used by polmineR.

Now we have individual token streams and metadata for each regional state. Unfortunately, memory gets scarce pretty fast, which at least slows the process down. That is why we do not try to keep the token streams in the RAM.

3.2.4 Creating the CWB corpus

We take these individual token streams and merge them to create the MigParl corpus. We add structural attributes to account for the sampling source of the individual speech (source_dict and source_topic_model) and the structural attribte calendar_week to make it easier to align the structural annotation of MigParl with MigPress.

References

Blätte, Andreas, and Andre Blessing. 2018. “The Germaparl Corpus of Parliamentary Protocols.” In Proceedings of the Eleventh International Conference on Language Resources and Evaluation (Lrec 2018), edited by (Conference chair)Nicoletta Calzolari, Khalid Choukri, Christopher Cieri, Thierry Declerck, Sara Goggi, Koiti Hasida, Hitoshi Isahara, et al. Miyazaki, Japan: European Language Resources Association (ELRA).

Blätte, Andreas, Merve Schmitz-Vardar, and Christoph Leonhardt. 2020. “MigPress. A Corpus of Migration and Integration Related Newspaper Coverage.” https://polmine.github.io/MigPress.

Blätte, Andreas, and Andreas M. Wüst. 2017. “Der Migrationsspezifische Einfluss Auf Parlamentarisches Handeln: Ein Hypothesentest Auf Der Grundlage von Redebeiträgen Der Abgeordneten Des Deutschen Bundestags 1996–2013.” PVS Politische Vierteljahresschrift 58 (2): 205–33. https://doi.org/10.5771/0032-3470-2017-2-205.

Evert, Stefan, and Andrew Hardie. 2011. “Twenty-First Century Corpus Workbench: Updating a Query Architecture for the New Millennium.” In.

Manning, Christopher D., Mihai Surdeanu, John Bauer, Jenny Finkel, Steven J. Bethard, and David McClosky. 2014. “The Stanford CoreNLP Natural Language Processing Toolkit.” In Association for Computational Linguistics (Acl) System Demonstrations, 55–60. http://www.aclweb.org/anthology/P/P14/P14-5010.

Schmid, Helmut. 1995. “Improvements in Part-of-Speech Tagging with an Application to German.” In Proceedings of the Acl Sigdat-Workshop. Dublin, Ireland.

Silge, Julia, and David Robinson. 2019. “Text Mining with R: A Tidy Approach.” https://www.tidytextmining.com.